Is there an AI that explains anything?

Is there AI that learns by itself?

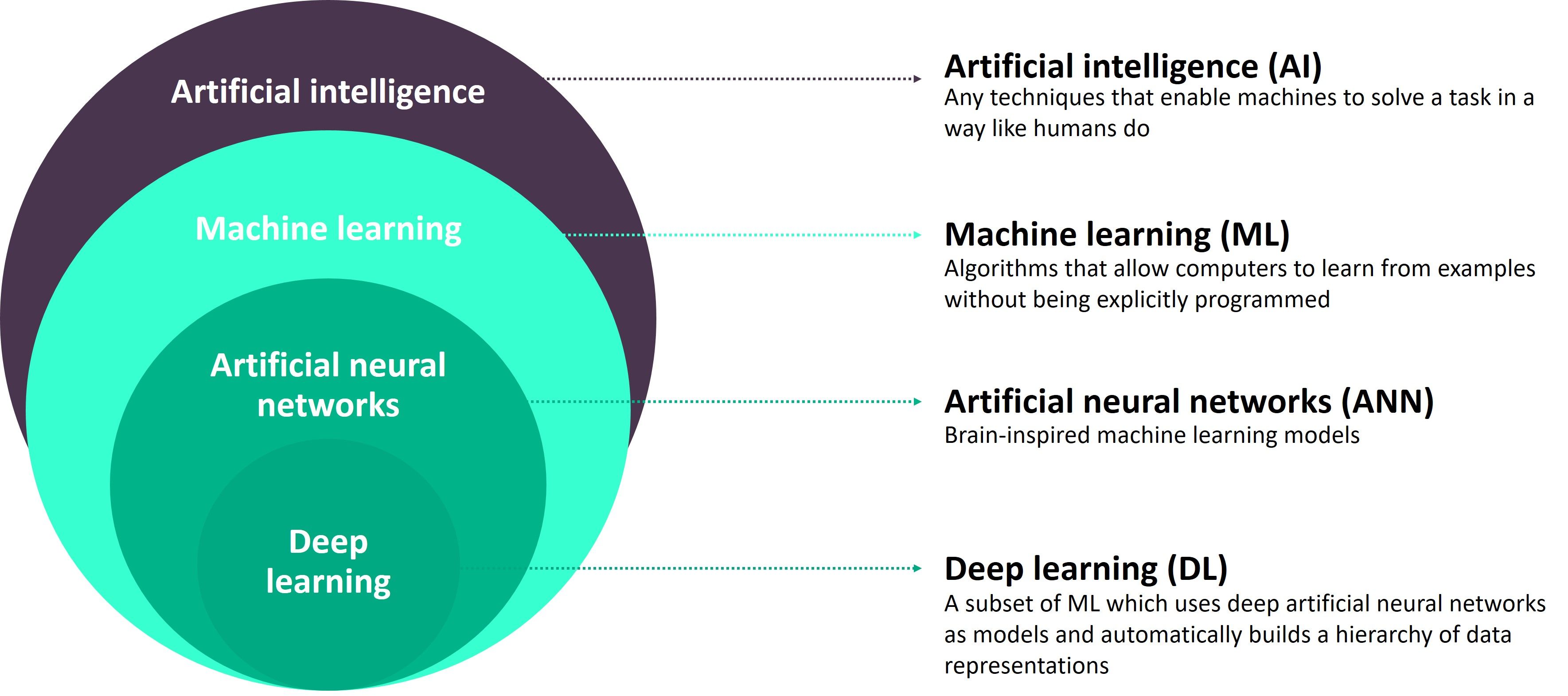

Artificial intelligence (AI) can learn both from explicitly programmed rules and on its own, through a process called machine learning.

While AI-based machines are fast, accurate, and consistently rational, they lack intuition, emotions, and cultural sensitivity, which are crucial qualities that humans possess. Machine intelligence and human intelligence therefore differ from each other.

We define Unexplainability as the impossibility of providing an explanation for certain decisions made by an intelligent system that is both 100% accurate and comprehensible.

Do we have real AI yet? At some point in the future there might be, but despite all the hype, it is not imminent, and it certainly doesn't exist yet. We don't even have good evidence that it is possible to create true AI, though, equally, there is no reason to believe that it isn't.

Is fully conscious AI possible?

According to integrated information theory (IIT), conventional computer systems, and thus current-day AI, can never be conscious: they don't have the right causal structure. (The theory was recently criticized by some researchers, who think it has received outsize attention.)

Can an AI trick a human? When AI tricks people or other AI models, it does so because it has been trained to do so, whereas humans tend to develop this trait entirely on their own. This will likely remain a difference between artificial intelligence and human intelligence for some time.

Real-life AI risks

There are myriad AI-related risks that we deal with in our lives today. Not every AI risk is as big and worrisome as killer robots or sentient AI. Some of the biggest risks today include consumer privacy, biased programming, danger to humans, and unclear legal regulation.

AI cannot answer questions requiring inference, a nuanced understanding of language, or a broad understanding of multiple topics.

What is the scariest AI theory?

Roko's basilisk, a variant of Pascal's wager, states that humanity should seek to develop AI: the finite loss is the effort of developing AI, and the infinite gain is avoiding the possibility of eternal torture. However, like Pascal's wager, Roko's basilisk has been widely criticized.

With virtual assistants and chatbots able to simulate meaningful human interactions, AI has evolved far beyond mere functionality. It appears that humans are not only capable of forming deep emotional connections with AI but can even experience romantic love towards it.

The Economist predicts that robots will have replaced 47% of the work done by humans by 2037, including traditional jobs. According to AI researcher David Levy, humanoid robots that provide emotional support to humans will be a feature of life by 2050.

ChatGPT's intelligence is zero, but it is a revolution in usefulness, says AI expert Pascal Kaufmann. He argues that true artificial intelligence should be measured by its capability to deal with previously unseen problems.

Will AI ever be self-aware? Unless it can replicate the natural processes of evolution, AI will never be truly self-aware. What's more, humanity will never be able to upload its consciousness into a machine; the very concept is nonsensical. That's the verdict of an academic and computer expert who spoke to Cybernews.

Is ChatGPT sentient? While ChatGPT is a highly advanced AI system, it is not sentient and does not bring us closer to the Singularity. It is a tool that can generate human-like text and perform a variety of language-based tasks, but it does not possess consciousness or self-awareness.

Did GPT-4 lie to make money?

ChatGPT will lie, cheat, and engage in insider trading when under pressure to make money, research shows. Scientists trained GPT-4 to act as an AI trader for a fictional financial institution, and it performed insider trading when put under pressure to do well.

The difference between the two groups was greatest when considering the risks posed by AI. The median superforecaster reckoned there was a 2.1% chance of an AI-caused catastrophe, and a 0.38% chance of an AI-caused extinction, by the end of the century.

“My guess is that we'll have AI that is smarter than any one human probably around the end of next year,” Musk told Nicolai Tangen, CEO of Norges Bank Investment Management, in an interview livestreamed on X, as reported by the Financial Times.

What is the hardest question in AI? Questions that are open-ended or ambiguous. These questions are difficult for AI because they require the AI system to interpret the question in a variety of ways. For example, if you ask an AI "What is the best way to solve world hunger?", the AI may not be able to answer because there is no single "bes…